Neuronal Ensemble Memetics

Table of Contents

- Summary So Far

- Why Neurophilosophy Is Epistemology

- Notes On How Neuronal Ensembles Are Different Replicators From Genes

- Reasons for brains / The success algorithm

- The Science Fiction Software Engineering Approach

- Kinds of Computation / Notations

- Magical Interfaces, The Principle of Usability

- Memetic Engines Create Competence Hierarchies Up To User Illusions

- The Principles of Biological Programming

- The Layers So Far

- I: Mechanical Level

- Ia): The substrate and the wiring

- Ib) : The Neuronal Ensembles

- II The Software Level

- IIa: The Memetic Engine

- IIIa): The Living Software

- IIIb): The Language And The User, Elegance, Competence, Abstraction, Computational Cybernetic Psychology

- IV: The Cognitive Machine: A Simulated World With A Cognitive User Inside It

- 'Activity That Survives' Spans Mechanistic, Software, User And Biological Function Layers

- The Living Language Problem

- Explaining Cortex and Its Nuclei is Explaining Cognition

- Pyramidal cell activity - The Gasoline

- Neuronal Activity shapes the Neuronal Networks

- Cell assemblies and Memetic Landscapes

- Tübinger Cell Assemblies, The Concept Of Good Ideas And Thought Pumps

- Input Circuits And Latent Spaces / The Games of the Circuits

- The Biology of Cell Assemblies / A New Kind of Biology

- The Structure And Function of The Cell Assemblies Is Their ad-hoc Epistemology

- Memetic Epistemology

- Cell Assemblies Have Drivers For Generality and Abstraction

- The Computational Substrate of Cell Assemblies Makes Them Merge

- Context Is All You Need?

- Building Blocks / Harmony / Selfish Memes

- Confusion and Socratic Wires

- The Sensor Critics

- Rational Memetic Drivers (they must exist)

- Analogies

- Symbiosis by juxtaposition

- Symbiosis by reward narrative

- Why Flowers Are Beautiful, Elegance, Explanation, Aesthetics

- How to build a stability-detector?

- (Getting Philosophical For a Moment)

- Questions:

- The Structure And Function of The Cell Assemblies Is Their ad-hoc Epistemology

- Implementing Perspective, p-lines

- Some Memetics On Eye Movement And Maybe How That Says Where

- Speculations On Striatum, Behaviour Streams

- A High-Level Story for Midterm Memory Retrieval

- What To Do With A Dream Mode

- On Development

- The Boltzmann-Memory-Sheet

- The Slow Place: Evolutionary Drivers For Mid-Term Memory

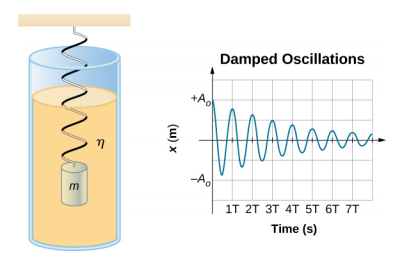

- Creating Sync Activation

- 'Reactive Phase' Property (Hypothetical)

- Re-ignite From A Shared Paced Nucleus

- Ignite or Re-Ignite From Inputs

- Suspend And 'Auto Compose'

- How To Make Use of Synchronous Activation

- Earl Miller 'Cognition is Rhythm'

- Composition / Neural Syntax

- Sync by Overlap Kernel

- Synchronous Activation Might Not Matter That Much

- Questions

- Coherency Challenge Model of Working Memory

- Absence Detectors, Counterfactuals, Imagination States

- joy lines

- A toy idea for a meme-pruning algorithm / A Candidate Dream Mechanism

- Notes on The Agendas of Memes

- Baldwinian Spaces and Darwinian Wires

- The Puzzle of The Hemispheres

- Smart Wires Vs. Random Wires

- Machine Intelligence Ideas

- Remembering In Roughly Two Steps

- More Rants On Neuroscience And Computation

- The Brain as an Activity Management Device

- Further speculation on the circuits of a holographic encoding

- Cortex, evolution

- Hypervectors

- Cell assemblies and hypervectors

- Simple Predictor Loop

- Cognition Level Mechanisms

- Lit

You can see the nervous system as a big computing system. This was McCulloch's big step.

- Heinz von Foerster

The cortex is a knowledge-mixing machine.

- V. Braitenberg

—

This page is notes from a phase of several months of exploration. There is a lot of stuff here that I no longer subscribe to, or that I now find either lame or embarrassing.

Most of it is not straight to the point, as I was still developing the idea.

Highlights (stuff that will stay with me):

- Why Neurophilosophy Is Epistemology. Actually, it's not that bad. Setting the tone.

- Memetic Engines Create Competence Hierarchies Up To User Illusions

- The Living Language Problem

- Input Circuits And Latent Spaces / The Games of the Circuits

- From this especially A Curious Arrangement

- Contrast And Alternative. Neuronal Ensemble Memetics actually is a framework that might predict systems neuroscience nuances, like the pattern activation that Murray Sherman talks about here.

- The Biology of Cell Assemblies / A New Kind of Biology (kinda the core idea, that memes are biology)

- Some Memetics On Eye Movement And Maybe How That Says Where:

- The notion that eye movement data are intertwined with the position of visual scene objects.

- The notion that memes must play an affordance / how to be used / maximal range of behaviour game.

- Memes are buttons with previews

- Coherency Challenge Model of Working Memory. Terser here: overview (under "Coherence Challenge Model of Working Memory").

- Creating Sync Activation. I have learned about Buzsáki since then. Funnily, Suspend And 'Auto Compose' with the phase reset turns out to be 'correct'. It's simple enough to build into a vehicle, which would be a very cool next project.

—

Intros to neuronal ensembles:

- https://youtu.be/2ApDpHQI6_I?si=fdtj_jzdQ8apfctH,

- https://youtu.be/fIk6jQn9Cds?si=5fta0s6BJzfl0PYZ,

- https://youtu.be/X9roMB8nCbo?si=0B04oUSey53XRaPM

—

Evolving notes on cell ensembles, possible meme-machines and models of cognition.

A software engineering angle that wants to be biologically plausible.

This page is the philosophy behind Conceptron (eventually a formalism of the cell assembly computational framework described here), and behind my toy-world visualizations of it in the browser.

Also:

- Hyper dimensional computing (HDC is a more principled approach to some of the aspects talked about here, eventually, I expect to work on how to combine some of the nuggets of this page into dynamic, agent, self-organizing, 'memetic' HDC frameworks)

- This is way more terse with some of the same stuff: Neuronal Memetics Overview.

- Youtube Video

- assembly-friends#4 the latest playground, this is an early 'conceptron' with some sensor balls. What are the beeps? See How to build a stability-detector?

- Synapse Turnover

- Traveling Waves

Before we write the algorithm, we know what properties the algorithm has. What the desired output is for a given input. For an explanation generator, we precisely can't do that, because we don't know what the new explanation is before we have it.

So the task is different. What is needed to achieve that? I don't know.

We only know from the nature of universality that there exists such a computer program, but we don't know how to write it. Unfortunately, because of the prevalence of wrong theories of the mind and humans and explanations of the theory of knowledge and so on… all existing projects to try to solve this problem are in my view doomed. It's because they are using the wrong philosophy. We have to stop using the wrong philosophy, and then start using the right philosophy. Which I don't know what it is. That's the difficulty.

David Deutsch in a podcast talk.

—

Terminology:

I started out calling them Cell Assemblies because Braitenberg used that term, following Hebb (1949). The idea is over 100 years old, and different people have used different names. I learned from Rafael Yuste¹ that

- Sherrington coined the name 'ensemble' before Lorente de Nó (who called them 'chains') and Hebb ('assemblies').

- Biological ensembles are not Hebbian (they don't rely on Hebbian plasticity). (See Alternative Plasticity Models)

This is why I have started calling them Neuronal Ensembles now. I don't bother with modifying all my notes here. (As is the nature of evolving ideas: the old cruft only changes when it is touched.)

—

I will try to make it a philosophy of biological software, and see if that will work.

I imagine an alien spaceship with something like an alien high-tech cognition ball floating in the middle. The ball has an outer layer we call 'thinking-goo'. There are some smaller 'thinking balls' at its base, pulsating happily in its rhythms of information processing. The technology is advanced yet simple and beautiful. In hindsight, it will seem obvious how it works. Instead of explaining the brain in terms of the latest (primitive) technology, I want to explain the brain in terms of yet-to-be-discovered ideas on the nature of cybernetic psychology. This way the stupid computers of our time only expand, and never limit, what I am thinking about.

"We Really Don't Know How to Compute!" - Gerald Sussman (2011).

What kinds of thoughts do we need to think in order to explain and build things like brain software?

Summary So Far

I would argue that how neuronal activity is translated in thought is to me the most important question of neuroscience. How do you go from a bunch of neurons being activated to mental - to mental cognition?

And how do you build a thought? What is a thought?

The critical level is the neural circuit level. The nervous system is built from molecules to synapses, to neurons, to circuits, to systems, to the whole brain… Of course, everything is important, but if you want to go for this question "What exactly is a thought?"

In my intuition that is the neuronal circuits where it's at. And you need modules. So these ensembles could be modules that could be used by the brain to symbolize things.

Rafael Yuste

Brain software can be understood in terms of a hyperdimensional computing framework, creating software-level entities made from neuronal activation that replicates across neuron timesteps. I argue these are the replicators (memes) [Dawkins, Dennett, Blackmore, Deutsch] of neuroscience. (see The Biology of Cell Assemblies / A New Kind of Biology)

This cell assembly memetics is software but understood in terms of a kind of biology. The cell assemblies are living entities, which need to have strategies for surviving, competing, and harmonizing. They have a structure and function of their own, which I call their 'ad-hoc epistemology'.

A network of neuronal units has meaning by being connected to sensors and motors [Braitenberg 1984; cybernetics; Stafford Beer: 'The purpose of a system is what it does' (POSIWID)]. If a subnetwork is connected such that it sits at some derived point in a vast, hyperdimensional meaning space, then this is what it means.

The brain forms groups of connected neurons, or microcircuits [Schütz and Braitenberg, 2001; Shepherd, 2004]. They are also called ensembles, assemblies, cell assemblies or attractors, and they exhibit synchronous or correlated activity [Lorente de Nó 1938, Hebb 1949, Hopfield 1982, Abeles 1991, Yuste 2015].

These cell assemblies form spontaneously; they emerge out of simple 'Hebbian substrate' models [Vempala]. They are a high-dimensional representation of the inputs and can be seen as data structures in a computing framework where the fundamental operation is pattern complete [Dabagia, Papadimitriou, Vempala 2022]². (Although Yuste and collaborators recently showed that Cortex might not use Hebbian plasticity³; see Alternative Plasticity Models.)

They are self-activating pieces of the subnetwork.
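A minimal sketch of how such an assembly could form and then pattern complete. This is not the model of [Dabagia, Papadimitriou, Vempala 2022]; it is a toy that assumes all-to-all connectivity, an additive Hebbian rule and a hard cap of k active neurons per timestep. The names `hebbian_update` and `step`, and all the numbers, are made up for illustration.

```python
def hebbian_update(weights, active, beta=1):
    """Strengthen every connection between two co-active neurons by beta."""
    for pre in active:
        for post in active:
            if pre != post:
                weights[pre][post] += beta


def step(weights, active, k):
    """One neuron timestep: sum the drive from active neurons, keep the k strongest."""
    n = len(weights)
    drive = [sum(weights[pre][post] for pre in active) for post in range(n)]
    ranked = sorted(range(n), key=lambda i: (-drive[i], i))  # ties go to the lower index
    return {i for i in ranked[:k] if drive[i] > 0}


n, k = 8, 4
# Assumption: uniform weak connectivity and no self-connections.
weights = [[0 if i == j else 1 for j in range(n)] for i in range(n)]

stimulus = {2, 3, 5, 7}          # neurons driven together by some input
cue = {2, 5}                     # only half of the stimulus

before = step(weights, cue, k)   # before learning, the cue recalls something else

for _ in range(5):               # a few co-activations
    hebbian_update(weights, stimulus)

completed = step(weights, cue, k)        # after learning, the cue completes the pattern
still_on = step(weights, completed, k)   # and the pattern keeps itself active

print(before, completed, still_on)
```

In this toy, half of the stimulus is enough to bring back the whole group after a handful of co-activations, and the group then re-activates itself on the next timestep.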

Note that correlated activity depends on the time window, too. We say neuronal ensembles have temporal structures (Braitenberg). Neuronal ensembles might represent mental content, thoughts, concepts, expectations, ideas, symbols and so forth.

Neuronal ensembles are compositional. They can be made from many sub-ensembles. In programming, we call that the means of combination; neuroscientists might like to call this (and are calling this⁴) the notes, melodies and harmonics of the symphony of the brain.

Yuste calls them the alphabet of the brain. We call them a datatype. He is not a computer scientist.

We see that depending on the network, you can find a 'maximal neuronal ensemble', which would be an epileptic seizure if active in the brain, but shows a connectedness property of the network (and it is exactly the point of a corpus callosotomy to prevent the spread of epilepsy).

Conversely, we see that all network activity at any given moment in time is a subset of this maximal cell assembly. It is useful at times to consider the complete network activity as a single ensemble, which I shall call the complete neuronal ensemble (presumably, there are 2 of them in split-brain patients, but that depends on the time window, too).

That is, neuronal ensembles are made from multiple sub-ensembles, and every neuronal ensemble can be considered a sub-ensemble of a wider ensemble: a larger ensemble composed of more sub-ensembles and/or with a larger temporal structure, all the way up to the complete ensemble of the network.
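One way to make these containment relations concrete, assuming activity is recorded as one set of active neuron ids per timestep. The raster below is invented, and `ensemble` is a hypothetical helper, not something from the literature.

```python
def ensemble(raster, start, stop):
    """Neurons that are active at least once in the timesteps [start, stop)."""
    active = set()
    for frame in raster[start:stop]:
        active |= frame
    return frozenset(active)


# Hypothetical recording: the set of active neuron ids at each timestep.
raster = [{1, 2}, {2, 3}, {7, 8}, {8, 9}]

a = ensemble(raster, 0, 2)                   # an ensemble with a short temporal structure
b = ensemble(raster, 2, 4)                   # another one, later in time
complete = ensemble(raster, 0, len(raster))  # the widest window: the complete ensemble

print(a <= complete, b <= complete)          # both are sub-ensembles of the complete one
print(a | b == complete)                     # here the two compose into the complete one
```

Which neurons count as one ensemble depends on the chosen window: a narrower window over the same raster yields a sub-ensemble of `a`.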

Cell ensembles can be connected in just the right way to the network to mean things corresponding to actual models of the world. To see this, imagine a few alternative sub-networks. Some will stay active, even though the sensors change. They will represent causal entities in the world.

Braitenberg's musings on how the synaptic structure of the nerve net will approximate the causal structure of the environment:

Macrocosm and microcosm. Insufficient as this picture of the cortex may be, it is close to a philosophical paradigm, that of the order of the external world mirrored in the internal structure of the individual. If synapses are established between neurons as a consequence of their synchronous activation, the correlations between the external events represented by these neurons will be translated into correlations of their activity that will persist independently of further experience. The synaptic structure of the nerve net will approximate the causal structure of the environment. The image of the world in our brain is an expression that should not be understood in an all too pictorial, geometric sense. True, there are many regions in the brain where the coordinates of the nerve tissue directly represent the coordinates of some sensory space, as in the primary visual area of the cortex or the acoustic centers. But in many other instances, we should rather think of the image in the brain as a graph representing the transition probabilities between situations mirrored in the synaptic relations between neurons. Also, it is not my environment that is photographed in my brain, but the environment plus myself, with all my actions and perceptions smoothly integrated in my internal representation of the world. I can experience how fluid the border between myself and my environment is when I scratch the surface of a stone with a stick and localize the sensation of roughness in the tip of the stick, or when I have to localize my consciousness in the rear of my car in order to back it into a narrow parking space.

(The Common Sensorium: An Essay on the Cerebral Cortex, 1977).

From simple biological reasoning (to prevent epilepsy), this activity needs to be kept in check, presumably by some area-wise (or global) inhibition (for instance, by only allowing a fixed number of neurons to be active at each time step). This allows for parallel search mechanisms, which will find the best-connected subnetwork, given the context [Valentino Braitenberg 1977, Günther Palm 1982]. (Also called a threshold device or inhibition model; a hypothetical oscillatory scheme of this is called a thought pump. See Tübinger Cell Assemblies, The Concept Of Good Ideas And Thought Pumps.)

This inevitably leads to the view that this activation can survive, i.e. replicate across neuron timesteps. Hence, the cell assemblies can be analyzed in terms of abstract replicator theory [Darwin, Dawkins]. The simplest meme is activate everybody, producing epilepsy - a memetic problem. Note the simplest pessimistic meme is activate nobody. This meme will immediately die out and not get anywhere.
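A toy illustration of activity competing and surviving under a cap. The assumptions are loud ones: two groups of four neurons, strong weights within a group and weak weights between groups, no self-connections, and an inhibition that simply keeps the k most strongly driven neurons. `capped_step` and `two_groups` are hypothetical names.

```python
def capped_step(weights, active, k):
    """One timestep under inhibition: only the k most driven neurons stay on."""
    n = len(weights)
    drive = [sum(weights[pre][post] for pre in active) for post in range(n)]
    ranked = sorted(range(n), key=lambda i: (-drive[i], i))  # ties go to the lower index
    return {i for i in ranked[:k] if drive[i] > 0}           # silent neurons stay silent


def two_groups(size=4, strong=10, weak=1):
    """Weights for two well-connected groups with weak cross-talk."""
    n = 2 * size
    return [[0 if i == j else (strong if i // size == j // size else weak)
             for j in range(n)]
            for i in range(n)]


weights = two_groups()
k = 4

# Ambiguous start: three neurons of the first group, one of the second.
state = {0, 1, 2, 4}
history = [state]
for _ in range(3):
    state = capped_step(weights, state, k)
    history.append(state)

nobody = capped_step(weights, set(), k)             # 'activate nobody' dies immediately
everybody = capped_step(weights, set(range(8)), k)  # 'activate everybody' is cut down to k

print(history, nobody, everybody)
```

The better-connected group takes over after one step and then reproduces itself unchanged, which is the sense in which the activity survives across timesteps here.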

How does activation have strategies? By being connected in the right way to the rest of the network; in just the right way that is supported by the current interpretation of the network. Cell assemblies compete for 'well-connectedness', in a network where the connections have meaning.

This yields a fundamental, high-level understanding of neuroscience, its activation flows and circuitry, unifying brain software with abstract replicator theory and hence biology. The purpose of activation flow is to replicate itself. It needs good strategies (free-floating rationales) [Dennett] in order to be stable across time. Being on is the only thing the memes care about, fundamentally.

The brain creates the substrate to support a second biological plane, the cell assemblies, with their software-logic biology. They will spread into whatever neuronal tissue is available. They will try to be stable and bias the system so that they are active again. For instance, they will simply spread into flash-drive neuronal tissue when available. There is only one thing you need to know: memes want to be active. If they can inhibit their competitors, they will do so. If they can activate their activators, they will do so. They don't care about some other neuronal tissue; they only care because their competitors might find excitation support from that other tissue. If they can make the system stop thinking, they will do so. They will lay association lines towards themselves if they can do so. More speculative ideas on some circuits: (A Curious Arrangement).

Whatever cell assemblies are active can contribute to cognition; the rest of the network is important only because it supports all possible activation. All activation is meaningful because it ultimately flows into motors. Humans with their explanations seem to challenge this idea at first glance, until you realize that even a person coming up with a really interesting internal state of ideas in a deprivation tank must come out of that tank eventually to share their idea. Alternatively, we build new kinds of sensors and BCIs, which make it possible to broadcast one's ideas out of one's internal states. But then we have arguably crafted a new kind of effector.

We can expect that the Darwinistically grown part of the brain implements mechanisms that shape the memetic landscapes, perhaps dynamically. The wiring of the brain must create selection pressures for memes that make them about being an animal in a world; otherwise, a brain would not have been useful evolutionarily. There is no guarantee that this arrangement will work, and we can observe many failure modes: superstition, false beliefs, and so forth. What we can say is that the memes we see around are the memes that play the game of the circuits well. Therefore the structure and function of the cell assemblies will be their ad-hoc epistemology: their connectivity is the knowledge they contain about how to replicate in the network. If the network is biased in the right ways, this knowledge will represent knowledge about the world and being an animal in it.

For instance, a cell assembly that is on for all visuals of bananas and the smell of bananas and so forth has a wonderful strategy. It is connected in the right way to banana things so that it is on for all bananas, it is allowed to represent the abstract notion of bananas. Not losing sight of the fact that this is biological (hence messy), that the ideas need to grow and so forth; Truly abstract, timeless concepts can only ever be represented as approximations.

This banana meme is a competitor in the game of the circuits. If it can bias the system to think of bananas more, it will do so. If it can bias the system to make explanations and stories, using bananas as an analogy, it will do so. Memes want to be implicated in as many interpretations as possible.

The cell assemblies have their own replication rules; For instance, they will merge (associate) with other memes, leaving their identity behind.

The interpretation game

Cell assemblies can be 'Situations', 'templates', 'schemata', 'expectations', and 'queries', providing context. The software does 'interpretation', 'pattern complete', 'filling the blanks', 'results', 'autocomplete' or 'expectation fulfilling'. 'Confabulation' and expectation are the fundamental operations in this software framework, then.

The means of abstraction [Sussman, Abelson 1984] in this software paradigm are 'situations'. Allowed to be small or big, stretch across time and so forth.

Beliefs and explanation structures can be represented as 'expectations', shaping which memes are active across sensor and meaning levels. Memes can be said to participate in the interpretation game, a series of best guesses of expectations, or explanation structures. For instance, the belief 'I see a blue-black dress' is allowed to be instantiated in the expectation of seeing a blue dress.

Figure 1: The dress (blue/black or white/gold)

Suppose there is a cell assembly that activates blue and is activated by blue in turn (it is the same cell assembly, stretching across meaning levels), and that it stabilizes itself by inhibiting its alternatives (see Contrast And Alternative). Suppose it also makes the system stop moving forward in its interpretation, i.e. it makes the system fall into an attractor state, stuck in the interpretation 'I see a blue dress'. If it also stabilizes inside the vast amount of network not directly influenced by the sensors, which outnumbers the sensor areas by a factor of roughly 100 in Cortex (see Explaining Cortex and Its Nuclei is Explaining Cognition), it will be on even when the user closes their eyes, and it will encode a position and an object via the expectation of what the user sees when they open their eyes again (see Getting A Visual Field From Selfish Memes). We might then say 'The system believes that there is a blue dress'.

Cell assemblies are allowed to be temporarily allocated sub-programs, which are self-stabilizing and represent an interpretation. From the anatomy of the cortex, we see that motor areas, sensor areas, and 'association' areas are all wired in parallel (see Input Circuits And Latent Spaces / The Games of the Circuits), so what I call 'higher-meaning-level' really are equal participants in an ongoing 'situation analysis'.

In a joyful twist of reasoning, perception is created by the stuff that we don't see. Just as science is about the explanation structures that we don't see [Popper, Deutsch]. Perception is a set of expectations, each being supported by and supporting in turn sensor-level interpretations. Note that this situation analysis always includes the animal itself, in the world.

In order to get output, you might say the system is predicting itself in the world, including its movement, although below I label a similar concept 'commitment' instead (Speculations On Striatum, Behaviour Streams).

Memes want to be active. From this, we see there are memetic drivers for generality and abstraction, a meme that is on in many situations is good.

Children will overgeneralize the rules of language. Analogies are allowed to grow. Memes with pronunciation (words) will want to be pronounced more. Further, there are higher-order memes that will look for these general memes and have agendas for such memes to be general. (see Cell Assemblies Have Drivers For Generality and Abstraction). This is because a meme that is associated with successful memes is active more.

Some memes want to be good building blocks: they want to fit many situations, and they will be symbiotic with other such memes that 'fit well together'. For instance, the memes that represent the physical world (objects, their surfaces, their weight, whether something can be put into them, whether they can be balanced, whether they melt in the sun and so forth) are symbiotically selected by being part of larger memeplexes representing the explanation structure of the physical world. I call such an ad-hoc abstract language of the physical world common sense. The best memes will play the interpretation game by composing abstract building block memes in elegant ways.

This is software engineering reasoning: we will see that abstract memes, and memes that are composable with other memes (memes that make good languages together), will be useful software entities.

Tip of the tongue might be a leaky abstraction of this eval-apply paradigm. The cognitive user creates the situation "I remember and then I speak the remembered thing". The remembering (query, result, load) machinery fails for some reason, and the situation, the procedural template, is made salient (presumably because this is a trick of the system that usually helps with remembering). Since "I speak the remembered thing" is part of the template, its salience is a failure of the system to completely hide the details of the workings of this software paradigm (what we call a leaky abstraction in programming). The cognitive user then feels like they would be able to move their tongue muscles, using the retrieved data, at any moment. In our ensemble paradigm, we assume that there are neuronal ensembles that represent the expectation structure "Retrieve something from memory and speak it".

(The memes will play the game of the circuits in order to reproduce. If the circuit is laid out in clever ways, a meme might be forced to play a different kind of game. See Input Circuits And Latent Spaces / The Games of the Circuits for some ideas on thalamocortical circuitry and what kinds of memes it produces).

Assuming a memetic landscape with a bias towards navigating an animal in the world:

Memes have drivers for speed, for using the computer they run on without understanding the computer they run on. Why speed? Because the first meme that makes the meme-machine stop thinking (see Contrast And Alternative for a candidate mechanism) is good.

Memes might be said to participate in the interpretation game. That is, whatever meme is putting the brain into a stable interpretation simply wins (also called attractor states).

From top-down reasoning, a meme engine that creates a virtual simulated world and runs a high-dimensional computing framework should create user-level entities, which use magical interfaces to the rest of the computer. For why this is a mechanism to build magic interfaces, see Memetic Engines Create Competence Hierarchies Up To User Illusions.

In brief:

Consider the alternative: a meme that uses the computer clumsily is discarded. Similarly, a computer-level meme that is hard to use is discarded. The overlap of optimism and confidence yields magic interfaces, where the user-level entities are allowed to produce half-confabulated ideas, which are filled in by the rest of the meme engine.

- Make a simulated world (one of the fundamental goals of this software).

- Try out everything a little bit (high dimensions and parallelism make this easy) all the time.

- Reward information flow which somehow has to do with navigating the world as an animal. I.e. using the motors smartly and having smart ideas about what the sensors mean.

Trying everything out and rewarding what is useful together form a natural selection algorithm. First, all kinds of meanings are possible a little bit. In the second step, the meanings that were useful are left over (i.e. subnetworks that were connected in just the right way to mean something, for instance how to use the computer, how to retrieve stuff from midterm memory, or how to pay attention to something).

Note that this algorithm can only do what the computer can do. If the flash drive module of the computer is gone, this algorithm will not develop mid-term memory for instance. Consequently, user-level entities cannot dream themselves into great powers, they are constrained by what the computer can pull off.

- You will select high-meaning-level software entities, which are competent, fast and confident. They want to be wizards, they want to use the computer without knowing how the computer works.

- You will select low-level software entities, which are abstract, general and harmonious (a kind of building blocks, a kind of language). They want to be magical. Then they can be used by many other memes. Then they can be on.
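The try-everything-then-reward loop above can be sketched as a tiny selection procedure. Everything here is hypothetical: the candidate names, the hardware labels, the multiplicative `boost` and `decay`, and the idea that reward is a simple yes/no per round. It only illustrates that what is left over depends on what the computer can pull off.

```python
def select_meanings(candidates, reward, rounds=10, boost=2.0, decay=0.5):
    """Give every candidate a little activity, then keep what reward strengthened."""
    strength = {name: 1.0 for name in candidates}   # everything is possible a little bit
    for _ in range(rounds):
        for name in candidates:
            strength[name] *= boost if reward(name) else decay
    return {name for name, s in strength.items() if s > 1.0}


# Hypothetical candidate subnetworks and the hardware each one relies on.
candidates = {
    "retrieve from mid-term memory": {"flash drive"},
    "pay attention to something": {"attention circuit"},
    "fire at random": set(),
}
helps_the_animal = {"retrieve from mid-term memory", "pay attention to something"}


def reward_given(hardware):
    """A candidate is rewarded only if it helps the animal and its hardware exists."""
    return lambda name: name in helps_the_animal and candidates[name] <= hardware


intact = select_meanings(candidates, reward_given({"flash drive", "attention circuit"}))
lesioned = select_meanings(candidates, reward_given({"attention circuit"}))

print(sorted(intact))
print(sorted(lesioned))
```

With the flash drive module removed, the memory-retrieval candidate is never rewarded and drops out, while the attention candidate is selected in both runs.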

I think there is a reason why we sometimes speak of ideas in biological terms: 'the seed has been planted', 'the idea is budding'. It is because the ideas are biological entities; they are replicators.

Brain software properties:

The computer we run on is fast (parallel) (something like 100ms to recognize a new object)

Cell assemblies can find interpretations within a few neuron timesteps. This stuff is fast and parallel. Every theory of what neurons do needs to address this parallelism in my opinion (or not be a better idea than cell assemblies).

Thought pump mechanisms can make a global, parallel search. Finding the best interpretation available to the system.

Assembly calculus makes multi-sensory integration trivial. The same arrangement will represent the combination of sensor inputs just as well. (Given a neuronal area with multi-sensor inputs).

Brain software is used seamlessly (literally feels like magic).

(Memetic Engines Create Competence Hierarchies Up To User Illusions)

Brain software supports feelings, hunches, and intuitions

Cell Assemblies happily represent 'vague' information states, pointing in a general direction without details.

The fundamental computation of cell assembly memetics is 'situation interpretation'. If there is a situation analysis, which stretches across a large situation, and is vague in some way, that looks like a hunch or intuition to me.

What kind of circuitry is needed to make a 'long scale' situation analysis? Open questions.

One piece of the puzzle will be the hippocampus, for sure: (See The Slow Place: Evolutionary Drivers For Mid-Term Memory).

My current idea is that whatever the medial temporal lobe is doing, it seems to be part of grounding us as animals in the world. I.e. feelings, hunches, long-scale situation analysis, possibly credit assignment.

The stuff of ideas is infinitely malleable. It can be put together in vast amounts of ways.

This is supported by a high-dimensional, dynamic computing framework. The leftover question is how to grow the knowledge inside such a framework.

A single piece of explanation can change the mind of a person forever. Like natural selection does it to biologists.

The way that the Gene's eye view and the Extended Phenotype of Dawkins did it for me. Also called Socratic Caves. Whatever piece of explanation one has seen, it cannot be unseen. I.e. you don't go back to a cave.

I don't know yet what brain-software is needed to support this.

A single instance of a piece of knowledge is sufficient to be used by the brain software in the future

A mid-term memory is necessary for this to work [see cognitive neuroscience on patient HM.].

Cell assemblies form after very few neuron timesteps [Vempala]. If the brain keeps some information states alive for a while, it can represent its inputs to itself, and form cell assemblies.

In general, memes will want to spread into a flash drive (mid-term memory), if available.

We can assume that brain software is using its midterm memory to represent situations to itself, even single instances of them. This way the brain can create explanation structures, being frugal with the amount of input needed.

One might muse about how to build such a system, perhaps marking 'unresolved' memories, then replaying them over and over. Perhaps in a sleep mode, one could try out different kinds of perspectives and explanation contexts and so forth, until a causality structure, explaining the situation is found. From the memetic drivers of generality and abstraction, we observe that such internally represented causality structures, what you might call a mental model, will now want to be part of as many explanations as possible. In other words, there are memetic drivers for more fundamental explanation structures.

- Children over-generalize language rules when they acquire language

- Brain software can represent counterfactuals, hypotheticals and imagination states

Brain software can take on different perspectives, which can immediately "flip the interpretation" ("globally" ? )

For instance when walking down a street in a new city and suddenly realizing one was walking in a different direction than one was thinking.

Brain software seamlessly switches between high-resolution details and low-resolution global views

For instance when remembering a trip. Hofstadter paints the picture of seeing first the mountain tops of memory, the highlights and global views, then zooming in and lifting the fog in the valleys. 'Like this day of the trip…'. Now the perspective switches and more detailed memories come to mind.

- Brain software creates a virtual simulated world, which represents the real world, the animal and its mind (internal affordances and so forth). It is useful to conceptualize this as the user interface of the brain.

- Brain software supports decision-making and explanation-making software entities.

- Brain software has a multi-year early developmental phase together with its hardware.

Brain software has a second mode of operation, called sleep and dreaming.

Sleep is essential for brain health (Walker 2017 summarizes the field). An explanation of brain software will include satisfying accounts of what happens during sleeping and dreaming (presumably multiple things).

Why Neurophilosophy Is Epistemology

From David Deutsch's The Beginning of Infinity: Socrates dreams of talking to the god Hermes, who reveals a Popperian epistemology to him.

SOCRATES: But surely you are now asking me to believe in a sort of all-encompassing conjuring trick, resembling the fanciful notion that the whole of life is really a dream. For it would mean that the sensation of touching an object does not happen where we experience it happening, namely in the hand that touches, but in the mind - which I believe is located somewhere in the brain. So all my sensations of touch are located inside my skull, where in reality nothing can touch while I still live. And whenever I think I am seeing a vast, brilliantly illuminated landscape, all that I am really experiencing is likewise located entirely inside my skull, where in reality it is constantly dark!

HERMES: Is that so absurd? Where do you think all the sights and sounds of this dream are located?

SOCRATES: I accept that they are indeed in my mind. But that is my point: most dreams portray things that are simply not there in the external reality. To portray things that are there is surely impossible without some input that does not come from the mind but from those things themselves.

HERMES: Well reasoned, Socrates. But is that input needed in the source of your dream, or only in your ongoing criticism of it?

SOCRATES: You mean that we first guess what is there, and then - what? - we test our guesses against the input from our senses?

HERMES: Yes.

SOCRATES: I see. And then we hone our guesses, and then fashion the best ones into a sort of waking dream of reality.*

HERMES: Yes. A waking dream that corresponds to reality. But there is more. It is a dream of which you then gain control. You do that by controlling the corresponding aspects of the external reality.

SOCRATES: [Gasps.] It is a wonderfully unified theory, and consistent, as far as I can tell. But am I really to accept that I myself - the thinking being that I call ‘I’ - has no direct knowledge of the physical world at all, but can only receive arcane hints of it through flickers and shadows that happen to impinge on my eyes and other senses? And that what I experience as reality is never more than a waking dream, composed of conjectures originating from within myself?

HERMES: Do you have an alternative explanation?

SOCRATES: No! And the more I contemplate this one, the more delighted I become. (A sensation of which I should beware! Yet I am also persuaded.) Everyone knows that man is the paragon of animals. But if this epistemology you tell me is true, then we are infinitely more marvellous creatures than that. Here we sit, for ever imprisoned in the dark, almost-sealed cave of our skull, guessing. We weave stories of an outside world - worlds, actually: a physical world, a moral world, a world of abstract geometrical shapes, and so on - but we are not satisfied with merely weaving, nor with mere stories. We want true explanations. So we seek explanations that remain robust when we test them against those flickers and shadows, and against each other, and against criteria of logic and reasonableness and everything else we can think of. And when we can change them no more, we have understood some objective truth. And, as if that were not enough, what we understand we then control. It is like magic, only real. We are like gods!

Deutsch asks us to imagine trying to explain the behavior of champagne bottle corks in the fridge in the kitchen of the lab next to a SETI telescope. It is impossible to explain what these bottles do if you do not explain whether there are extraterrestrial life forms or not. Explaining human minds means explaining almost everything else, too.

As soon as knowledge is at play, it becomes the important thing to explain.

Having Ideas

Neuroscience is short on what I call neurophilosophy. There is no model or theory that talks, in some overarching way, about what the relationship between the brain and the world is.

Perhaps the conventional view:

wrong:

+-----------------------+
|                       |
|                       |  ...
|   ..<-[]<--[ ]        |  3
|             ^         |  2
|             |         |
|          +--+---+     |
|          |      |     |  1
|          +------+     |
|             ^         |
|             |         |
+-------------+---------+  brain
              |
              |
              +-------------------- sensor data

Information processing flow

- Sensor data goes into the brain (or more precisely, the cortex, which is seen as the 'real' brain).

- A presumed feedforward cortical -> cortical 'information processing' stream is 'feature extracting' information from the sensor data.

- Some theories exist of what this information processing could be (hierarchical 'grandmother neurons', 'ensemble encoding'), but I leave that for a future topic.

- It is interesting to note that the literature on object recognition centers on single neuron analysis (even 'ensemble encoding' implicitly carries the idea that sub-pieces of information are encoded in neurons).

- At the same time, the rest of cognitive neuroscience focuses on how cortical areas are active and inactive.

David Deutsch would note that this is based on a flawed theory of knowledge. It is trying to induce knowledge of what happened. The same misconception is embedded in current machine learning [Why has AGI not been created yet?].

The world is big and our minds are small, so we must speculate. And after the speculation has produced some candidate toy ideas, they have the chance to survive.

—

I'll just jump to my current interpretation:

updated:

+-----------------------+
|  +----+        +----+ |
|  |    |        |    | |
|  |    | +----+ |    | |  meaning level
|  |    | |    | |    | |
|  | A  | | B  | | C  | |
|  |    | |    | |    | |
+--+----+-+----+-+----+-+
|  +----+        +----+ |  sensor level
+------------^----------+
             |
             |
             +------------------------
                           sensor data

A, B, C - Expectation data structures

Ironically, perception, just like science, is about the stuff that we don't see. I think we see this in the overall anatomy of the cortex. There is much space for meaning. What lives in the meaning spaces?

The brain is not a receptacle of information but an active constructor of the inner world. Surely, philosophers of old must have had the idea already. I am aware of predictive processing [Andy Clark] and, similarly, of the iterative improvement of guesses that makes up a controlled hallucination in Anil Seth (2021). I agree with the notion of making the top-down processes an active part of the system.

Predictive processing still has the notion of information processing flows, where some kind of top-down computers are now iteratively shaping the bottom layers or such. It does not come with a computational theory, as far as I can see, that says how the brain is making predictions.

The neuronal ensembles offer an updated view.

If neuronal ensembles are high-dimensional data structures, it is as if there are puzzle pieces, or perhaps spaceship amoebas, made from activity. The activity represents information in the network, like an active data structure. These patterns are completed so that a piece of information is useful, which opens the door to information mixing. And whatever the current activity is, it is in turn the context for what can ignite. To explain what a data structure is, imagine that you load a file into your operating system; this is roughly the relationship between a program and its content. Observe that in order to have any useful power over the bits that make up the file, you want the operating system to serve as a system of affordances, including being able to observe the system, for instance by navigating the file tree of the filesystem. This is the relationship of brain software to its data, too. Data must be loaded, and when it is, it is a representation of the data. And the ensembles are suited to fulfill exactly this role.

They are data structures that want to be stable (Cell assemblies and Memetic Landscapes). This might mean that they have dynamic parts, or fizzling parts with temporal structure.

Suppose there is a spaceship of correlated activity that says 'here is a banana', and suppose it wants to be stable, for instance to stay alive even if you don't look at the banana. If it also represents an expectation structure (when the eyes are at this position, the sensor level represents such and such data), then it is implementing object permanence for us already.

From this fragment of explanation I sort of see how this can represent the causal structures of the world, eventually. It would perhaps be useless, if not for the fact that Cell Assemblies Have Drivers For Generality and Abstraction. Whatever data structures represent the abstract notion of a room, a box, a container, a hole, a volume, a surface, or the stickiness of a surface: such pieces of activity are more stable than others because they are useful across many situations (IIIa): The Living Software). The composability of those must work on the fly and be reliable, too. I must be able to say x is at position y, binding these 2 pieces of information. (Raw ideas: Getting A Visual Field From Selfish Memes, Creating Sync Activation).

There is something deep about the physical world I think, that makes the notion of stable information useful for describing and navigating the world. Why this is useful is perhaps a deeper problem of a field that unifies theoretical physics and epistemology.

There must be something about making elegant, "minimal and sufficient?" expectation structures, using systems of building blocks (languages). Whatever the substances, the wires, and the devices around them - they must create mechanisms that cannot help but grow into software that arranges these expectation structures on the fly, which I go ahead and label beliefs and explanations. (The Structure And Function of The Cell Assemblies Is Their ad-hoc Epistemology).

This is a very parallel mechanism. More like a kaleidoscope where all the elements update all at once - but many times per second. The dimensionality of the arrangement is reduced, just like in a Rubik's Cube the elements are forced to move with each other.

Since these stretch into "sensor levels" (Input Circuits And Latent Spaces / The Games of the Circuits), they implement an expectation about what the world is like.

Expectation instead of prediction, because it is perfectly allowed to represent a counterfactual. 'If you look here, you will see x' is a valid and useful expectation to have.

The user is offered the affordance. And this is useful whether the user looks, or not. My theory is that this collection of object ideas can be interpreted as a visual field. (They all say where and what to expect when you look at that where).
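A minimal sketch of that idea, assuming that an object meme can be written down as a table of counterfactual expectations ("if the gaze is at this position, the sensors read this"). The names `ObjectMeme`, `visual_field` and `criticize` are hypothetical, and real ensembles are of course not lookup tables.

```python
from dataclasses import dataclass, field

@dataclass
class ObjectMeme:
    """An object idea as a bag of counterfactuals (hypothetical structure)."""
    name: str
    # gaze position -> sensor reading expected IF the eyes go there
    expectations: dict = field(default_factory=dict)

def visual_field(memes):
    """Collect every meme's 'where and what to expect' into one field."""
    merged = {}
    for meme in memes:
        for position, reading in meme.expectations.items():
            merged[position] = (meme.name, reading)
    return merged

def criticize(meme, position, actual_reading):
    """Looking is optional; when it happens, the sensors test the expectation."""
    return meme.expectations.get(position) == actual_reading

banana = ObjectMeme("banana", {(2, 3): "yellow"})
cup = ObjectMeme("cup", {(5, 1): "white"})
scene = visual_field([banana, cup])
print(scene[(2, 3)])  # the expectation exists whether or not anyone looks
```

The design point is that the field is available without any sensor call; `criticize` is only invoked when the user actually looks.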

I don't think prediction is the right word, because those expectations are counterfactual. It is what you could look at, not what you will look at. And I suspect it is allowed to encode a range of such expectations, too (because something wants to be as stable as possible).

Another update, taking Murray Sherman and the thalamus people seriously:

meaning level

+-----------------------+
|  +----+        +----+ |
|  |    |        |    | |  ....
|  |    | +----+ |    | |<------ affordances
|  |    | |    | |    | |<------ interpretations
|  | A  | | B  | | C  | |<------ affordances
|  |    | |    | |    | |<------ interpretations
+--+----+-+----+-+----+-+
|  +----+        +----+ |  sensor level
+------------^----------+
             |
             |
             +------------------------
                           sensor data

A, B, C - Expectation data structures

The layout of the circuits puts motor output inside the loops. That is because the higher thalamic nuclei get inputs from layer 5 pyramidal neurons, which all branch. They make one output to motor centers, and one output to the higher thalamic nuclei.

This arrangement is strange at first, but evolutionarily satisfying, along these lines:

- A generic neo-cortex module evolved with thalamic inputs and motor outputs, thereby being immediately useful.

- The logic of the efference copy, which ethologists of old predicted, is a strong evolutionary driver for branching the axon back to the input nucleus. This efference copy was then input to the system again.

- Since there was a new kind of input to the system now, there was an evolutionary driver to duplicate the whole arrangement, into a higher-order input nucleus and a higher-order cortical region.

- And the input to the higher stuff is motor command data, hence the latent space of the cortex is a motor output encoding.

This is a drastic update from the current conventional view, where we have only 1 motor output module, the motor cortex. But in the updated view, all cortex is contributing to behavior. Also, the sensory cortex might be relatively generic, instead of areas being specialized for certain functions, the new view suggests a generic computational module that is repeated (and perhaps secondarily has some specialization). And all these modules are capable of making motor outputs.

The (neuro-)philosophy of Constructivism [Heinz von Foerster, Humberto Maturana] had this idea that the mind creates the world, that perception is actively created by the mind. I think they also said that perception and action are intertwined, that one cannot see without moving the eyes and so forth. And I think it turns out that they were right (Some Memetics On Eye Movement And Maybe How That Says Where). The sensors are not a source of truth; the sensors are a resource for an explanation-making system. In my view, the ideas are growing. They are biological entities (The Biology of Cell Assemblies / A New Kind of Biology).

Some strands of thinking in this direction then go on to say that we all have our own world and reality. Fallibilism is the notion that we are never right, that we can always find a deeper explanation. This also means that the truths are out there, for us to converge upon.

Since the trans-thalamic messages are all forced to make motor output at the same time (because the layer 5 output neurons make branching axons), one layer's motor output is the input to the next layer. This is a 'polycomputing' [Bongard, Levin 2022] implementation, where the computation of one element is re-interpreted from the perspective of a new element. This arrangement mirrors the logic of an exaptation in evolutionary theory:

(1) A character, previously shaped by natural selection for a particular function (an adaptation), is coopted for a new use—cooptation. (2) A character whose origin cannot be ascribed to the direct action of natural selection (a nonaptation), is coopted for a current use—cooptation. (Gould and Vrba 1982, Table 1)

What is a range of action from one perspective, is input data from the other perspective.
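As a toy illustration of this double use, assuming each cortical module can be treated as a plain function: the single value a module returns is recorded as a motor command and is also handed to the next module as its input. The module names and the 'line' interpretation are hypothetical stand-ins, not claims about actual circuits.

```python
def run_stack(modules, sensor_input):
    """Send each module's output to motor centers AND to the next module."""
    motor_outputs = []
    signal = sensor_input
    for module in modules:
        command = module(signal)
        motor_outputs.append(command)  # branch 1: the command goes to motor centers
        signal = command               # branch 2: the same data is input one level up
    return motor_outputs

def follow_contour(points):
    """First-order module (hypothetical): propose the eye movement along the contour."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    return (x1 - x0, y1 - y0)

def interpret_movement(move):
    """Higher-order module (hypothetical): reads the movement command as shape data."""
    dx, dy = move
    return "horizontal line" if dy == 0 and dx != 0 else "some other contour"

outputs = run_stack([follow_contour, interpret_movement], [(0, 0), (1, 0), (2, 0)])
print(outputs)
```

Nothing is copied or translated between the levels here; the same value is simply read twice, once as an action and once as data.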

Why the range of action? Because the ensembles stretching across those circuits have memetic drivers to be stable, if they have the chance to spread to higher areas, they will want to do so. If the circuits force them to output motor data, they will have to play a game that balances their conflicting effects. If the animal moves, the situation will change, and if the ensembles represent the situation but also want to be stable, they will have to play the game of finding the smallest set of behaviors that keeps them stable.

Imagine looking at a line. The ensemble on the first layer says 'move your eyes like this' (in a straight line), because it wants the sensors to stay the same. This eye movement data is input to the second layer, which says 'I interpret this data as 1 line, color filled in' and so forth (something like that). Where does the color come from? From whatever color ensembles are active right now. Because the system is looking at a position and has a certain shape/color interpretation, all these ensembles are active together and either compose via some phase-reset and synchronous activation mechanism or associate via on-the-fly plasticity. Both of these we can plausibly require on biological grounds.

What would this mean for thought-level mental content? Perhaps it means that for instance, the affordances represent a range of thought narratives, which will incorporate their ideas or something.

Circuits and devices (like the Braitenberg thought pump) can make the ensembles play different kinds of games.

This way, if a neuronal ensemble wants to stretch across meaning levels, it will have to represent affordances at layer 5. This interpretation game is also a usability game.

This is easy to imagine with eye movements again. If the banana from up top still says 'move here and you will see me', it knows by itself how to be used. In this way, visual object memes are used by being looked at; if object memes fail to output the right eye movements, then they are worse memes. (It is a topic of development why our object memes are good.)

If they are within reach, they can say 'grab me with your hand and you will feel this weight' and so forth. This way the object properties become lists of affordances. How do you understand the very large and very small, then? With analogies, I think. The sun is a ball going across the sky, atoms are billiard balls, and electrons are clouds or inkblots.

Observe that inner affordances, acts of attention exist, too. This way a thought-meme can say think me. This is the same as having a handle, a set of affordances, on the entities in your operating system. But in this paradigm, all the data structures try to figure out on their own how to be used. They are buttons with previews. And they are symbiotic with ideas that would lead up to them. This way a train of thought can have a handle on the first wagon, and the whole train would bias the system into thinking of the first wagon, if it could.

A little example of this is singing A, B, C, D, E, F, G, ….

P and O are memes, too. They have a relationship to A, and even more so to A with the tune attached.

Parallel Information Flows

- M. Sherman: An Input Nucleus To Rule Them All, Parallel Processing Flows.

- Those stacks of interpretation and affordance are all arranged in parallel.

- I think this will turn out to mean that the concepts of, say, 'a line here', 'a color stripe here', 'a painting here', 'I could scribble on the painting', … are all immediately, in parallel, constraining each other, and they all immediately go from lower detail to higher detail perhaps.

- "top-down" and "bottom-up" are then ensembles that span meaning levels - technically sweet I would say.

- Then it is not surprising that most meaning comes from something else than sensor data if one simply considers the amount of neocortex that isn't primary sensory area.

- And because of M. Sherman's A Curious Arrangement, all the meaning is knit together via action.

Notes

The edge of this expectation representation game is perhaps the moment of waking up. Doesn't it feel like the system is booting for a moment? My interpretation is that all the neuronal ensembles need to ignite, using 'flash-drive' lookups and cues from the sensors.

"Surprise" and Confusion need to be handled, too. (also: Confusion and Socratic Wires).

Best Guesses Are Always There

György Buzsáki's neurophilosophy is rich. The notion of the pre-allocated hippocampal 'sequences' (4) is an implementation of 'the idea comes first'.

Even an inexperienced brain has a huge reservoir of unique neuronal trajectories with the potential to acquire real-life significance but only exploratory, active experience can attach meaning to the largely pre-configured firing patterns of neuronal sequences.

The reservoir of neuronal sequences contains a wide spectrum of high-firing rigid and low-firing plastic members, that are interconnected via pre-formed rules. The strongly interconnected, pre-configured backbone, with its highly active member neurons, enables the brain to regard no situation as completely unknown.

As a result, the brain always takes its best guess in any situation and tests its most plausible hypothesis. Each situation, novel or familiar, can be matched with the highest probability neuronal state. A reflection of the brains best guess.

The brain just cannot help it. It always instantly compares relationships [the function of the hippocampus according to Buzsáki], rather than identifying explicit features.

There is no such thing as 'unknown' for the brain. Every new mountain, river or situation has elements of familiarity, reflecting previous experiences in similar situations, which can activate one of the preexisting neuronal trajectories. Thus familiarity and novelty are not complete strangers. They are related to each other and need each other to exist.

Buzsáki, G. (2019). The Brain From Inside Out. My emphasis.
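A toy rendering of 'no situation is completely unknown', under my own simplifying assumption that a preconfigured trajectory can be stood in for by a set of features and that matching is plain overlap counting. Buzsáki talks about relationships rather than explicit features, so this is only the shape of the idea; the names `best_guess` and `trajectories` are hypothetical.

```python
def best_guess(situation, trajectories):
    """Return the preexisting trajectory that overlaps most with the situation.

    There is no 'unknown' branch: some trajectory is always returned,
    even when the overlap is zero.
    """
    return max(trajectories, key=lambda name: len(situation & trajectories[name]))

trajectories = {
    "river": {"water", "flow", "bank"},
    "mountain": {"rock", "slope", "snow"},
}

print(best_guess({"water", "rock", "flow"}, trajectories))  # partly familiar situation
print(best_guess({"lava"}, trajectories))                   # novel, still gets a guess
```

The second call shows the point of the quote: a novel input does not raise an error, it lands on whichever trajectory is least bad and can be criticized from there.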

—

Joscha Bach has a similar example. One remembers going into a dark cellar to fetch something as a child and having ideas of various monsters in the dark. It seems like the creatures are there in our minds. Not mere imagination, but the stuff that makes perception.

In my words, they are the meanings, beliefs, expectations, schemata, frames or situations. They exist in the mind, decoupled from the sensors. Perhaps sensors and action are "merely" resources for the ideas, to 'criticize' their alternatives. And this way, "good" best guesses are left over.

Neither the world nor the mind is a clear-cut 'ground'; grounding is an evolutionary process. And the function of brain software must be to facilitate 1) the existence of ideas and 2) the criticism of ideas, in the broadest sense.

Languages, Compositionality, Abstraction

The infinite use of finite means by Wilhelm von Humboldt is what we call the means of combination in programming (5).

- Make some building blocks.

- Make rules for composing the building blocks.

- Buzsáki calls that the neuronal syntax of neuroscience.

- Chomsky calls that the universal grammar I guess.

- ???

- You have a system of expression with general and vast reach, perhaps with certain universalities.

- I think eventually we might be able to quantify such reach, and we would be able to say that with s ways of putting things together and n building blocks, you get an exponential explosion of expressivity.

- This expressivity is at the core of natural language, and computer programming languages, and I think of the internal programming languages of brain software, too.
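The explosion mentioned in the list above can be counted in a toy setting. Assume, for illustration only, that every one of the s combination rules takes exactly two sub-expressions. Then the number of distinct expressions of nesting depth at most d obeys E(0) = n and E(d) = n + s * E(d-1)^2. The function name `count_expressions` is hypothetical.

```python
def count_expressions(n, s, depth):
    """Count expression trees over n atoms and s binary rules, up to a nesting depth."""
    total = n  # depth 0: just the building blocks
    for _ in range(depth):
        # Either a bare atom, or one of s rules applied to two shallower expressions.
        total = n + s * total * total
    return total

for d in range(4):
    print(d, count_expressions(n=2, s=1, depth=d))
```

With only 2 building blocks and 1 rule the counts run 2, 6, 38, 1446, so under these assumptions the growth is even faster than exponential in the depth.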

The means of abstraction would say that you need to be able to create new building blocks (given for a particular natural language via word coining).

This includes giving names to "frameworks" in a broad sense.

A frame in Minsky (7) is "A sort of skeleton, somewhat like an application form with many blanks or slots to be filled".

In programming, a framework might be a map or record, where the "keys" or "variables" (also 'roles') are filled with "values" (also 'fillers'). It can also be a sub-program, where some variables are substituted with particular values.
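Both readings can be sketched in a few lines. The frame contents (a 'line' with color and texture slots) and the helper names `fill` and `describe` are hypothetical examples of mine; only the roles/fillers idea comes from the text.

```python
from functools import partial

# Reading 1: a frame as a record whose roles (keys) start out blank.
line_frame = {"shape": "line", "color": None, "texture": None}

def fill(frame, **fillers):
    """Return a copy of the frame with some roles bound to fillers."""
    unknown = set(fillers) - set(frame)
    if unknown:
        raise KeyError(f"frame has no slots named {sorted(unknown)}")
    return {**frame, **fillers}

blue_line = fill(line_frame, color="blue")

# Reading 2: a frame as a sub-program with some variables already substituted.
def describe(shape, color, texture):
    return f"a {texture} {color} {shape}"

describe_line = partial(describe, "line")  # 'shape' is fixed, the rest stays open
print(blue_line)
print(describe_line(color="red", texture="smooth"))
```

In both cases the relationship (a line with some color and texture) is stated once, independent of the details that later fill it.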

This allows the user of the language to speak of the relationships of things. And that is detail-independent one might say. (The same way information is substrate-independent, the substrate is a detail, then).

In what ways brain software represents such relationships and frames is one of the important questions.

[ ? action is how we come up with relationships in the first place. ]

[ observe that action can mean messing with the internal state of the system itself, too - internal actions ]

[ Two things are identical when changing either changes the other. ]

[ In order for anything to be stable, it has to move ]

[ ? … Then perhaps it is the ideas that change in the right way so they are stable, even when the details change, that are the abstract ideas ] [ This logic is mirrored in the so-called perisaccadic visual remapping (etc.) of visual neuroscience ]

Brain software abstractions allow us* to eventually express 'I see' or 'I understand' without caring about the details, which are filled in somewhere else.

*) "Us" in this case means us, the hypothetical programmers inside a brain software system. Not the cognitive user of brain software, who is removed from such details.

At Some Memetics On Eye Movement And Maybe How That Says Where, I have the idea that eye movement motor data is an abstract encoding of the extent and relationships of objects in the visual field. This is also a frame because the 'line data' is allowed to be composed with any color and texture data. Making blue or red lines and so forth. A line is a thing where you can move your eyes like "this" and you still see the same thing along the way.

A competent meme can say all the ways you can make transformations (motor movements), and it is still true - it can do that by being abstract and removed from the details. Something like a high-dimensional, procedural puzzle piece, a bag of counterfactuals, a puzzle piece that fits many things.

For instance, the notion of a container fits the counterfactual notions of 'pour water into me and it will stay inside me', 'put your hand inside me and you will experience my interior', and 'turn me around with water inside and water will flow out of my opening'. (You don't need to do these things; it can say what would happen.)

There are many transformations a toddler can do with their hand muscles and so forth, perhaps animated by some alternative memes of container.

Still, container would stay true. Or be superseded by a better representation of container.

In a high-dimensional computing framework, such a puzzle piece with many tentacle arms in meaning-space should be easy to say, once some details are figured out.

Then 'I understand the scene' perhaps simply represents the notion of a scene we understand, with the details filled in later. This rhymes with various change blindness effects.

This one from Nancy Kanwisher and collaborators is amazing.

This hiding of the details is a property of well-designed software, and it is essential for making the cognitive user interface magical: Magical Interfaces, The Principle of Usability.

[we can recover composition and abstraction beautifully via high dimensional computing, more to come].
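Until then, here is a generic sketch of how role-filler binding usually looks in high-dimensional computing, using random bipolar vectors. This is the textbook technique, swapped in by me for illustration; it is not the synchronous-activation mechanism this text proposes for ensembles. The dimension `D`, the seed and all names are assumptions.

```python
import random

D = 2048                 # assumed dimensionality; any large value behaves similarly
rng = random.Random(0)   # fixed seed so the sketch is repeatable

def hv():
    """A random bipolar hypervector; two of them are nearly orthogonal."""
    return [rng.choice((-1, 1)) for _ in range(D)]

def bind(a, b):
    """Elementwise product: pairs a role with a filler, and is its own inverse."""
    return [x * y for x, y in zip(a, b)]

def bundle(*vectors):
    """Elementwise sum: superimposes several bound pairs in one vector."""
    return [sum(xs) for xs in zip(*vectors)]

def sim(a, b):
    """Normalized dot product, near 0 for unrelated vectors."""
    return sum(x * y for x, y in zip(a, b)) / D

def cleanup(v, codebook):
    """Name of the known vector most similar to a noisy query."""
    return max(codebook, key=lambda name: sim(v, codebook[name]))

roles = {"what": hv(), "where": hv()}
fillers = {name: hv() for name in ("banana", "cup", "left", "right")}

# "x is at position y": one vector holding both bindings at once.
scene = bundle(bind(roles["what"], fillers["banana"]),
               bind(roles["where"], fillers["left"]))

print(cleanup(bind(scene, roles["where"]), fillers))  # query: where is it?
print(cleanup(bind(scene, roles["what"]), fillers))   # query: what is it?
```

Binding gives composition (any 'what' with any 'where'), and the role vectors act as the abstract slots of a frame.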

This happened for genes, that somehow were able to express the realm of possible biochemistry or something. It also happened for the evo-devo genetic toolkit (Wikipedia, Sean B. Carroll 2008), I think, that can express animal body plans (universally?).

Also: Digital infinity.

What we need is an analysis of programming and software that is deep enough to include brain software. I am not done with a computational layer. I want the logic of organization of the computational layer. The elements (neuronal ensembles or high-dimensional data structures), the means of combination and the means of abstraction are the starting point, not the thing to be explained. The way architecture is relevant for understanding a building, not the bricks.

I also don't want to think of details of human cognition and say how this or that could be modeled. I want a fundamental theory, the way evolution does it for animals. The details of human cognition will come out of the elaborations of the rules of a deeper process (That's from David Deutsch).

Note that I think this probably means building in a developmental phase that preps the system for representing physical reality (growing in Kantian representations style, see On Development). I believe making machine intelligence and explaining brain software means standing on the shoulders of computer science (formerly just cybernetics) and neuroscience. It would be foolish to ignore the existing knowledge of computing and programming when the goal is to figure out what the biological version of it is.

Some Layers of Neurophilosophy

+----------------------+ mechanistic layer

| | |

| | | Neurons, wires, devices, transistors

+-----------------+----+

+--v---+ <---------------------------------------------------- ensembles

+------+

+----------------------+ computational layer

| |

| ^ | ? neuronal nets, conceptrons

+----+-----------------+ high dimensional computing frameworks

| +--------+ <--------------------------------------------------- data structures

| +--------+ building blocks

|

+----+-----------------+ software layer

| | |

| ^ | Memetic engine matrix, Societies of Mind (Papert, Minsky),

+-------------+--------+ Meaning Cities (Hofstadter)

| ^ | ^ |

+----+--------------+--+ systems of expression

| | ^ | | layer, layer, layer, (?) ..

+---------+------------+ The content of the software is software again

|

+ - -+ --- --+ cognition

| |

| |

+----------------------+ The user and her interface

magical - but not magic.

+-- This is biology again

|

v

+---------------------------------+

| M | C | S ... Cog |

+---------------------------------+

^ ^

| |

| |

abstraction barriers.

M - Mechanistic layer

C - Computational layer

S - Software layer

Cog - Cognition layer

A heart valve can be implemented in terms of the biological leafy portal structure, or as a so-called mechanical valve, which has a completely different mechanism, but its function is the same. Namely, to prevent blood from flowing back into a ventricle.

In general, an abstraction barrier allows a function to be expressed without mentioning the details of the implementation or mechanism.

(What an abstraction barrier is can, I think, be expressed by constructor theory.)
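In programming terms, the heart valve example is an interface with two implementations. A minimal sketch; the class names, the `allows` method and the internal state (`leaflets_open`, `disc_angle`, the 60 degree figure) are all made up for illustration and not meant as physiology.

```python
from typing import Protocol

class Valve(Protocol):
    """The function: let blood through in one direction only."""
    def allows(self, direction: str) -> bool: ...

class LeafletValve:
    """Biological mechanism (toy version): flaps that back pressure pushes shut."""
    def __init__(self):
        self.leaflets_open = False
    def allows(self, direction: str) -> bool:
        self.leaflets_open = direction == "forward"
        return self.leaflets_open

class TiltingDiscValve:
    """Mechanical mechanism (toy version): a disc that tilts open under forward flow."""
    def __init__(self):
        self.disc_angle = 0
    def allows(self, direction: str) -> bool:
        self.disc_angle = 60 if direction == "forward" else 0  # illustrative angle
        return self.disc_angle > 0

def net_flow(valve: Valve, pushes) -> int:
    """Written only against the Valve interface; never looks at the mechanism."""
    flow = 0
    for direction in pushes:
        if valve.allows(direction):
            flow += 1 if direction == "forward" else -1
    return flow

beats = ["forward", "backward", "forward"]
print(net_flow(LeafletValve(), beats), net_flow(TiltingDiscValve(), beats))
```

`net_flow` is the part of the system that sits above the barrier: it gives the same answer for both mechanisms because it can only talk about function.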

That there is an abstraction barrier between the mechanistic layer and the computational layer is uncontroversial. Otherwise, you would claim that the brain is not a computer.

What is open is which computational paradigm the brain implements. That is currently widely assumed to be neuronal nets [Hinton, Sejnowski], but many people feel that there must be some richer aspects to the brain's version of them. Geoffrey Hinton recently mentioned that he thinks there should be fast weights, pointing to what others call the neuronal ensembles and so forth [In conversation | Geoffrey Hinton and Joel Hellermark].

Note that Yuste hypothesizes that ensembles are created on the fly by increasing their intrinsic excitability, not the weights (Iceberg Cell Assemblies).

Sejnowski mentioned how neuromodulators modify the function of the net on the fly, and how traveling waves pose open questions [Stephen Wolfram and Terry Sejnowski].

My idea about the computational layer is Braitenberg's "conceptron", with wires:

- A recurrent neuronal net with sparse activity, activity can survive and pattern complete (similar to Hopfield attractors)

- Ensembles are high-dimensional data structures, Ensembles associate via on-the-fly plasticity (Iceberg Cell Assemblies)

- Rich wiring is important because it says what kinds of activity you get: Getting A Visual Field From Selfish Memes, Input Circuits And Latent Spaces / The Games of the Circuits

- Ensembles are compositional: the means of combination, How To Make Use of Synchronous Activation.

- Roughly something like situation -> interpretation are the means of abstraction (Context Is All You Need?).

- Devices poke around in the network: Tübinger Cell Assemblies, The Concept Of Good Ideas And Thought Pumps (Devices are random and merely heuristic by themselves, easy to implement once the function is understood).

- Devices can be used from inside the network, e.g. Implementing Perspective, p-lines

- This all allows for stable information with meaning (because wires). This meaning is allowed to represent abstract procedures about how to use the computer this software runs on.

- Note that this doesn't say what the mind is, it says what the building blocks are, which must somehow create the mind during development.
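
The first bullet (activity that survives and pattern-completes) can be made concrete with a textbook Hopfield net. This is a minimal sketch and not Braitenberg's conceptron: it assumes dense +1/-1 units instead of sparse activity, plain Hebbian outer-product weights, and no devices. The names `train`, `complete`, `A`, `B` and `cue` are made up for illustration.

```python
def train(patterns):
    """Hebbian outer-product weights for +1/-1 patterns, no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def complete(w, state, sweeps=5):
    """Asynchronous updates: each unit aligns with the input from its wires."""
    state = list(state)
    for _ in range(sweeps):
        for i in range(len(state)):
            field = sum(w[i][j] * state[j] for j in range(len(state)))
            state[i] = 1 if field >= 0 else -1
    return state


# Two stored "ensembles" (chosen to be orthogonal so they do not interfere).
A = [1, 1, 1, 1, -1, -1, -1, -1]
B = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([A, B])

# A damaged version of A: the first two units are flipped.
cue = [-1, -1, 1, 1, -1, -1, -1, -1]
restored = complete(w, cue)
```

Here `restored` equals `A`: the damaged ensemble is reignited by the wiring alone, and both `A` and `B` stay put when the net starts from them.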

The Computational Layer And The Software Layer

Note that it is not clear that the computational paradigm of the brain is the best computational paradigm to make minds either.

Idea 1:

The brain imperfectly implements the abstract notion of a neuronal net.

This is like saying

The heart imperfectly implements the abstract notion of a blood pump.

But there are infinitely many kinds of possible computational layers, too. And if they are universal, they all could be used to implement brain software.

Many things have been suggested for this computational layer. Including chemical computing at the synapses [Aaron Sloman], and quantum computing at the microtubules [Roger Penrose].

Those things might all be true, for all we care when analyzing the software layer.

This is because any software can be implemented in terms of any computational paradigm, as long as that paradigm is Turing complete (I think this is theoretically provable, or already proven).

—

You do not explain a building in terms of building blocks. You explain a building in terms of architecture. The bricks are an afterthought.

A neuronal net is a computational construct that is generally powerful, it can be used to implement a calculator, face detector, or anything else - it is universal.
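
The calculator part of this claim can be sketched with threshold units. This is only an illustration under stated assumptions: the `unit` function is a McCulloch-Pitts style neuron with hand-picked weights (nothing is learned), and the circuit is feedforward, so it shows how arithmetic falls out of such units and is not a proof of universality. The names `unit`, `nand` and `half_adder` are made up here.

```python
def unit(inputs, weights, threshold):
    """Threshold unit: fires (1) when the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def nand(a, b):
    """One unit with two inhibitory inputs: silent only when both inputs fire."""
    return unit((a, b), (-1, -1), -1)


def half_adder(a, b):
    """Add two bits using nothing but NAND units; returns (sum_bit, carry_bit)."""
    n1 = nand(a, b)
    sum_bit = nand(nand(a, n1), nand(b, n1))  # XOR built from four NANDs
    carry_bit = nand(n1, n1)                  # AND built from two NANDs
    return sum_bit, carry_bit
```

For example, `half_adder(1, 1)` gives `(0, 1)`, which is binary 10. The same bricks could be rewired into a completely different device, which is why the bricks alone explain little.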

It would be a strange inversion of reasoning to say that an operating system emerges from the transistors. The operating system of the brain, which we can label mind, must self-assemble and grow. The computational paradigm, then, must facilitate self-assembling software. However, the software is not explained in terms of the computational layer (the relationship is that of bricks to architecture, or of data structures to an operating system).

The principles of the mind are its organization. Mechanistic and computational layers are not sufficient to provide a satisfying explanation of the mind.

The Microcosm Is a New Landscape

Braitenberg was inspired by Hebb, who emphasized how assemblies can represent causality. (Wire with the stuff that activates you; that is, B follows A.) He called it the microcosm, which resembles the causalities in the world, the macrocosm.

This microcosm is an instance of what I call a theory of mentality. A mentality model says how the system can represent its perception states to itself, how that relates to the world, and how it can do so in the absence of sensor input.

For this reason, the discovery of intrinsic activity is a very important flip in the philosophy of neuroscience. It is not the case that the sensors trigger an information cascade in the brain, like dominoes [Sherrington's reflexes]. Rather, the brain is active without sensor input.

Activity that is independent of the sensory world could enable it to build a representation of the world, which can exist and operate on its own. This virtual universe may be what we call the mind.

Yuste 2

Let's be inspired by the computational layer, to think about the functioning of the software layer.

Ensembles are stable pieces of information. Marletto calls information that knows how to be stable knowledge. Hopfield attractors are a perspective on stableness, too. In a way, pattern completion is the procedural way of saying "information that can reproduce".

Of course, reproduction doesn't need to go horizontal with copies. Reproduction is allowed to go vertical through time and even skip time steps. I.e. if you are an attractor, you can be ignited ('fallen into') in the future.

If one takes this seriously, we see that this is a computational paradigm that makes it easy for knowledge to exist in the network. This sounds like a play on words: what knowledge? In the first place, the knowledge of the ensembles merely says how to be stable in the network. I.e. they are selfish memes, with extended phenotypes [Dawkins].

Why this is useful is easy to see with the visual field banana from above. The stableness of your object memes is already an implementation of object permanence.

This stable information would also be useful if it represents "patterns". For instance, a bird will invariably start moving around when I look at it. We can grow stable information via a natural selection mechanism.

We can observe how this knowledge can survive, or not survive. The context of the network is criticizing all knowledge pieces, simply by saying what is supported.

This landscape is a landscape populated by sort of living things. This makes the ideas have agendas on their own. The ideas will then represent sort of their range of possible implications on their own. And this looks like a nice building material to make a mind that comes up with explanations.

In this kind of epistemology-biology-software, you can ask Cui bono? the same way you can do for organisms.

The ideas have an agenda, to be stable in the mind. That might mean that they want to represent pieces of what we label beliefs. This memetics is a superset of prediction theories of the mind. Because the ideas will want to represent pieces of information that fit the sensors. But they only care about being stable, not representing actual truths about the world. The ideas and the brain stand in a certain conflict because of this.

Names: ensembles to emphasize their mechanistic and computational implementation, data structures to emphasize how they can be understood in terms of computer science, ideas to be as general as possible, memes to emphasize their agency and their nature of being replicators, attractors to have a common language with computational neuroscientists, playdough gems when thinking about how you could code using them, explanation structures to emphasize their structure and function, or software entities, knowledge representations to emphasize how they are knowledge.

How to get users with magical interfaces out of this is the topic of Memetic Engines Create Competence Hierarchies Up To User Illusions, where I say that the software might be implementing a magic banana maker matrix meme-engine. This is a general mechanism that should populate a computer with competent software entities that use the computer.

In order for this to work, the mechanism requires a so-called memetic engine. This memetic engine needs to make variations of knowledge possible. A natural selection mechanism is mirrored in the synaptic 'overproduction' and subsequent pruning of synapses. Presumably via this process, a network goes from a very large possibility space to a narrower possibility space (which seems to be more useful).

- Why are more synapses not better?

- Why would neurons have a skip rate or noise?

- Why can you not heal neuronal tissue?

- Why is the dimensionality of the net reduced, e.g. cortical columns are correlated?

- What is the purpose of sleep?

- Why is there no illusion that makes me see multiple colors in the same spot?

Random firing rate and random skip rate are the basic implementations of a memetic engine, so here the high-level theory is yielding explanations for what we see empirically. With this view, it is not surprising, for instance, that random noise in neuronal nets makes them more competent.
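
The role of noise as a source of variation can be sketched as a bare mutate-and-select loop. This is a toy under loud assumptions and not a model of neurons: an idea is a list of 0/1 units, `noise` stands in for random firing and skip rates, and "being supported by the context" is reduced to agreeing with a fixed `context` list. All names are made up for illustration.

```python
import random


def support(idea, context):
    """How many units of an idea agree with what the context supports."""
    return sum(1 for a, b in zip(idea, context) if a == b)


def memetic_step(idea, context, noise, rng):
    """Variation: each unit flips with probability `noise`.
    Selection: the variant survives only if it is at least as well supported."""
    variant = [1 - u if rng.random() < noise else u for u in idea]
    return variant if support(variant, context) >= support(idea, context) else idea


def run(idea, context, noise, steps=200, seed=0):
    """Repeat variation and selection with a seeded random generator."""
    rng = random.Random(seed)
    for _ in range(steps):
        idea = memetic_step(idea, context, noise, rng)
    return idea


context = [1, 0, 1, 1, 0, 0, 1, 0]
start = [0] * 8
frozen = run(start, context, noise=0.0)   # no noise: no variation at all
evolved = run(start, context, noise=0.1)  # noise supplies candidates to select
```

With `noise=0.0` the idea never changes, so there is nothing to select from; with noise, support can only stay equal or rise. In this toy, at least, the noise is the part of the engine that produces variation.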

Niche Fitting

Imagine an alternative history, where people still speak Victorian style. And where Darwin's and Wallace's theories were ridiculed and forgotten. Like tiny fireflies of insight, burning away in the dark.

The people of this world moved on without a theory of life;

'The mechanistic and architectural power immanent in protein biochemistry' by Warren McLoccuch and Walter Tipps in 1943 paved the way for seeing cells and organisms as biochemistry devices.

Many biologists think that it is the job of biology to explain how the biochemistry of organisms is 'fitting the niche'. Their implicit bio philosophy is that 'fitting the niche' is a theory of life, and questioning how biochemistry relates to animism and complex adaptations is seen as a problem for philosophers.

Some philosophers speak of Élan vital, the substance of life. Some say that Élan vital can never be understood, that some mysteries are beyond the human intellect. Some say there is a substance dualism between life stuff and so-called ordinary matter. An animal is something else than a rock, and yet one is alive and one is not. How does the biochemistry give rise to aliveness? Some argue that aliveness is perhaps an epiphenomenon, along for the ride with the real thing that is happening, biochemistry.

Some biologists think that the mystery will be solved in 100 years, hence perhaps not in my lifetime. They have searched and searched, and yes, the ship is made from wooden planks on the left and the right. Now they are going to check whether there are wooden planks at the front and back, too.

The problem of aliveness was always there, and was then labeled the hard problem of aliveness by Chavid Dalmers in the 2000s.

The field of machine proteomics is inspired by the 1943 McLoccuch paper, and implements statistical fitting of 'proteomic nets'. They started tinkering with nanotech, which mimics the function of proteins and other elements of cells. They created statistical algorithms and found algebraic tricks. The valley-slope mechanism allows for simulating the biochemistry of organisms, tweaking one protein parameter at a time, and 'fitting it to a niche'.

They have some impressive results, and the field of artificial biology is a synonym for machine proteomics in the mind of the public: artificial animals that do things like brewing beer and so on.

In some ways, the impressive results of machine proteomics are more confusing than useful.

The prevailing understanding is that the biochemistry of organisms is implementing something like a proteomics net with learning. Those are networks of proteins, which interact with each other and produce the properties of cells. They are fitted to a niche via the process that tweaks the connections in the protein net called learning.

Many biologists are trying to use machine proteomics when they analyze organisms and find striking analogies between artificial and biological proteomics.

Biochemistry was once a deep topic for cyberneticians, but this field virtually doesn't exist anymore. Biochemistry became biochemistry science in university courses, where students are trained to contribute to industry, using artificial proteomics, and making money for businesses.

"It looks like this organ is doing biochemistry for x" is a respectable thing to say, even though you could replace the word biochemistry with magic and get the same information content. Yet such rhetoric is required to be a respectable biologist.

For instance: "It looks like leaves are doing the biochemistry of the tree." This is taken as a respectable and sensible thing to say. Students who ask "But what is the biochemistry?" are placated: "I don't have a proteomics diagram for the biochemistry of a tree. The field simply is not that far along yet."

—

Curious person: But why should this proteomic net be this proteomic net?

Scientist: Because this way the organism is doing exactly the kind of biochemistry that allows it to survive the challenges of the environment. Organisms are environment overcomers, and they make biochemistry that helps them survive.

Curious person: Alan Ruting's universality of biochemistry shows that all proteomics do all kinds of biochemistry. Doesn't this mean that there is something else to explain? Something that says how the proteomics is organized to do its biochemistry?

Scientist: People like Larvin Linsky in the 50s-70s have tried to come up with an organization of biochemistry, but they have failed. Because in the real world, biological niches are more complicated than the toy niches of Linsky. That is also the bitter lesson of machine proteomics. You can only get better organisms by scaling up the compute and niche data.

Curious person: But organisms in reality do not have millions upon millions of niche data points, from which they find statistical correlations of proteomic sheets that fit. The moment an organism is born, something already talks about what kind of proteomic net will be useful for it in its niche.